Generative models for 3D object synthesis have advanced significantly by incorporating prior knowledge distilled from 2D diffusion models. Nevertheless, existing 3D synthesis methods still face challenges such as multi-view geometric inconsistency and low generation efficiency. These problems can be attributed to two factors: first, the lack of abundant geometric prior knowledge during optimization, and second, the entanglement between geometry and texture in conventional 3D generation methods. In response, we introduce MetaDreamer, a two-stage optimization approach that leverages rich 2D (texture) and 3D (geometry) prior knowledge. In the first stage, we focus on optimizing the geometric representation to ensure the geometric integrity and multi-view consistency of the 3D objects. In the second stage, we concentrate on fine-tuning the geometry and optimizing the texture to achieve a more refined 3D object. By leveraging 3D and 2D prior knowledge in the first and second stages, respectively, we alleviate the entanglement between geometry and texture and thus significantly improve optimization efficiency. Furthermore, we introduce non-main object suppression (NMOS) to prevent geometric collapse and propose 3D Knowledge Mining (3DKM) to improve the quality of 3D generation. MetaDreamer can generate high-quality 3D objects from textual prompts within 20 minutes, and to the best of our knowledge, it is the most efficient text-to-3D generation method. Extensive qualitative and quantitative comparative experiments demonstrate that our method outperforms state-of-the-art methods in both efficiency and the quality of the generated 3D content.

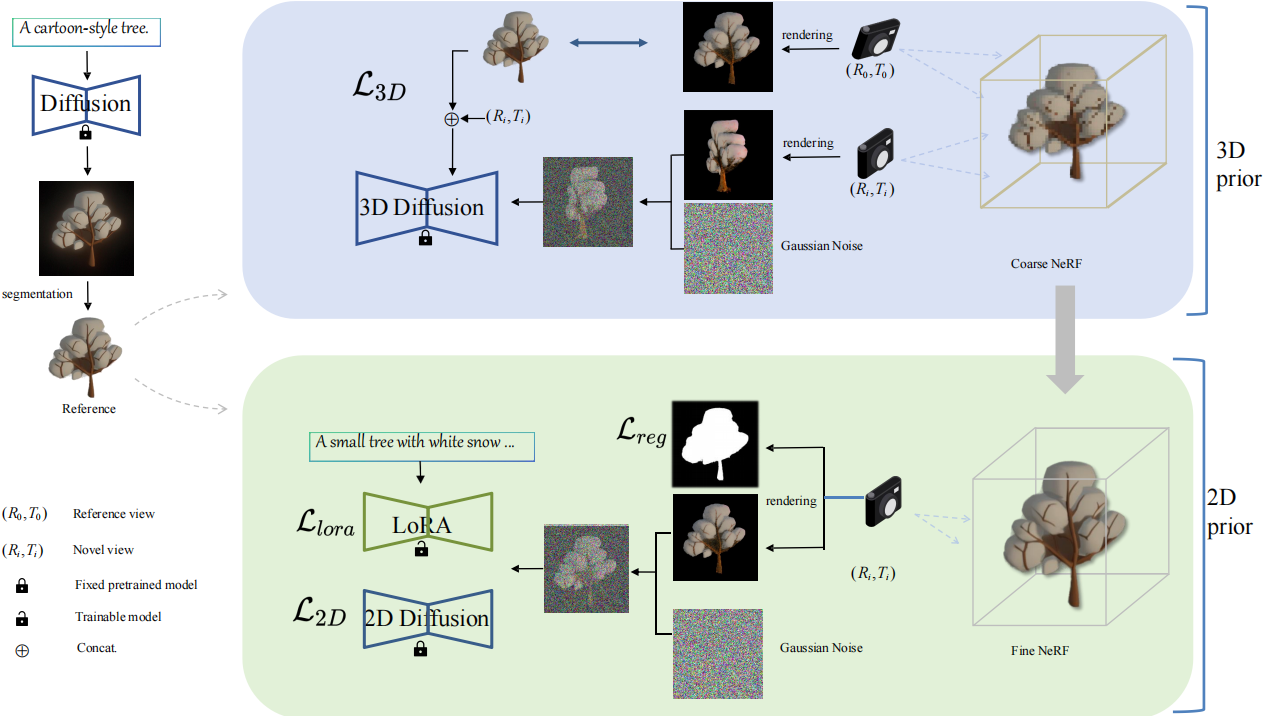

Architecture overview. MetaDreamer is a two-stage coarse-to-fine optimization pipeline designed to generate 3D content from arbitrary input text. In the first stage, we optimize a coarse 3D model, represented with Instant-NGP, guided simultaneously by a reference image and a view-dependent diffusion prior model. In the second stage, we continue to refine the Instant-NGP model using a text-to-image 2D diffusion prior model. The entire optimization process takes only 20 minutes.
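The two-stage schedule described in the caption can be sketched as a simple lookup from the iteration index to the guidance signals that are active. This is only an illustrative sketch, not the authors' implementation: the function name `active_guidance`, the guidance labels, and the stage boundary `stage1_steps=300` are hypothetical. Only the total of 1300 iterations is taken from the timing table.

```python
from typing import List


def active_guidance(step: int, stage1_steps: int, total_steps: int) -> List[str]:
    """Return the guidance signals used at a given optimization step.

    Stage 1 (coarse geometry): reference image + view-dependent diffusion prior.
    Stage 2 (refinement): text-to-image 2D diffusion prior.
    The labels are illustrative placeholders, not identifiers from any library.
    """
    if not 0 <= step < total_steps:
        raise ValueError(f"step {step} is outside [0, {total_steps})")
    if step < stage1_steps:
        return ["reference_image", "view_dependent_diffusion_prior"]
    return ["text_to_image_diffusion_prior"]


# Hypothetical split: the source reports 1300 iterations in total,
# but does not state how they are divided between the two stages.
TOTAL_STEPS = 1300
STAGE1_STEPS = 300  # assumption for illustration only

first = active_guidance(0, STAGE1_STEPS, TOTAL_STEPS)
last = active_guidance(TOTAL_STEPS - 1, STAGE1_STEPS, TOTAL_STEPS)
```

In this sketch both stages update the same 3D representation; only the source of the supervision signal changes at the stage boundary.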

| Method | Latent-NeRF | DreamFusion | Magic3D | SJC | ProlificDreamer | MetaDreamer |
|---|---|---|---|---|---|---|
| Time (min) | 100 | 60 | 125 | 65 | 420 | 20 |
| Iterations | 20000 | 10000 | 20000 | 10000 | 70000 | 1300 |

Table: Comparison of training times between MetaDreamer and other text-to-3D methods. All experiments were conducted on a single NVIDIA A100 GPU. All experimental settings (number of iterations, random seeds, etc.) followed the official default settings of threestudio.
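To make the table easier to interpret, the short script below derives two quantities from it: each method's wall-clock time relative to MetaDreamer, and the average time per iteration. The numbers are copied from the table. The helper names `speedup_over` and `seconds_per_iteration` are introduced here for illustration only.

```python
# (minutes, iterations) per method, copied from the timing table.
timings = {
    "Latent-NeRF": (100, 20000),
    "DreamFusion": (60, 10000),
    "Magic3D": (125, 20000),
    "SJC": (65, 10000),
    "ProlificDreamer": (420, 70000),
    "MetaDreamer": (20, 1300),
}


def speedup_over(method: str, baseline: str = "MetaDreamer") -> float:
    """How many times longer `method` takes than `baseline` in wall-clock time."""
    return timings[method][0] / timings[baseline][0]


def seconds_per_iteration(method: str) -> float:
    """Average wall-clock seconds spent on one optimization iteration."""
    minutes, iterations = timings[method]
    return minutes * 60 / iterations


for name in timings:
    print(
        f"{name:16s} {speedup_over(name):5.2f}x MetaDreamer's time, "
        f"{seconds_per_iteration(name):.3f} s/iter"
    )
```

Read this way, the table suggests that MetaDreamer's advantage comes from needing far fewer iterations rather than from cheaper iterations: its average time per iteration (about 0.92 s) is higher than that of the other methods (0.30 to 0.39 s).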

We compared the generation results of various methods under the same time budget (20 minutes). Our method generates high-quality 3D objects, while the other methods produce only blurry outlines.